.: Team Hillarious :.

The UW CSE Cyber Defense Team

DC20 CTF Quals Writeups: /urandom 400

By supersat

When we first looked at this binary, it was pretty obvious that it was a Kinect application. There were some interesting strings inside ("User::PoseDetection" and "No depth generator found." were some of the more helpful clues), and if you tried to run it, you'd get an interesting error:

    $ ./urand400-6180b91709d3e66879254315b8e0734c
    ./urand400-6180b91709d3e66879254315b8e0734c: error while loading shared libraries: libOpenNI.so: cannot open shared object file: No such file or directory

Hmmmm! OpenNI is a library for "Natural Interface" devices, and is used in a number of Kinect hacks.

At this point, our team started scrambling to find a Kinect at 8 AM on a Sunday and set up OpenNI. However, once leshi and I heard what they were up to, we headed over to UW's Robotics and State Estimation Lab, where they've been working with Kinect-like sensors for at least a few years now.

Before we could actually run the program, there was one more small hoop to jump through. We needed to create an OpenNI configuration file named SamplesConfig.xml. Thankfully, a quick Google search brought up two sample files that we basically mashed together. We needed to define a depth source, and since we figured it was going to be doing some gesture recognition, we enabled the User, Hands, Gesture, and Scene nodes.
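For the curious, here is a rough sketch of the shape such a file takes, generated with Python's standard library. It only lists the five node types mentioned above. The element and attribute names follow the layout of the OpenNI 1.x sample configs as best I recall it, so treat them as an assumption. A real SamplesConfig.xml also carries license and logging sections, which are left out here.

```python
import xml.etree.ElementTree as ET

# Node types we enabled, as described above: a depth source plus the
# User, Hands, Gesture, and Scene nodes.
NODE_TYPES = ["Depth", "User", "Hands", "Gesture", "Scene"]


def build_config(node_types):
    """Return a minimal OpenNI-style config as an XML string.

    Assumption: the <OpenNI>/<ProductionNodes>/<Node type="..."> layout
    matches the OpenNI 1.x samples. Licenses and logging are omitted.
    """
    root = ET.Element("OpenNI")
    nodes = ET.SubElement(root, "ProductionNodes")
    for node_type in node_types:
        ET.SubElement(nodes, "Node", {"type": node_type})
    return ET.tostring(root, encoding="unicode")


config_xml = build_config(NODE_TYPES)
print(config_xml)
```

Writing `config_xml` to a file named SamplesConfig.xml next to the binary would be the obvious next step, though the file we actually used was mashed together by hand from the two samples we found.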

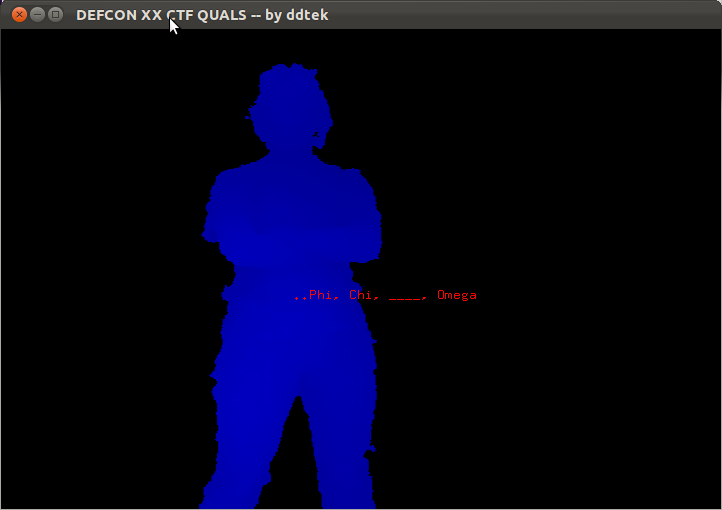

Once we got the app up and detecting people, we were treated to this challenge:

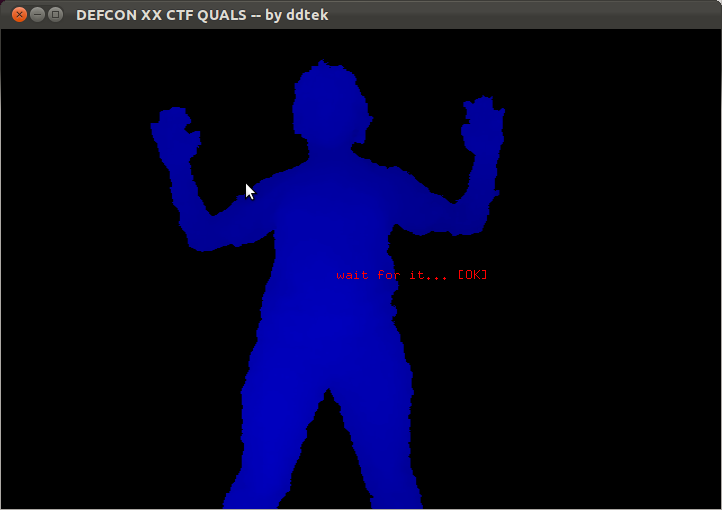

A quick Google search later and we found out that Psi was the missing letter in the sequence. As it turns out, there's a "Psi pose" that's used to calibrate OpenNI. Once we made the Psi pose, we saw something like this:

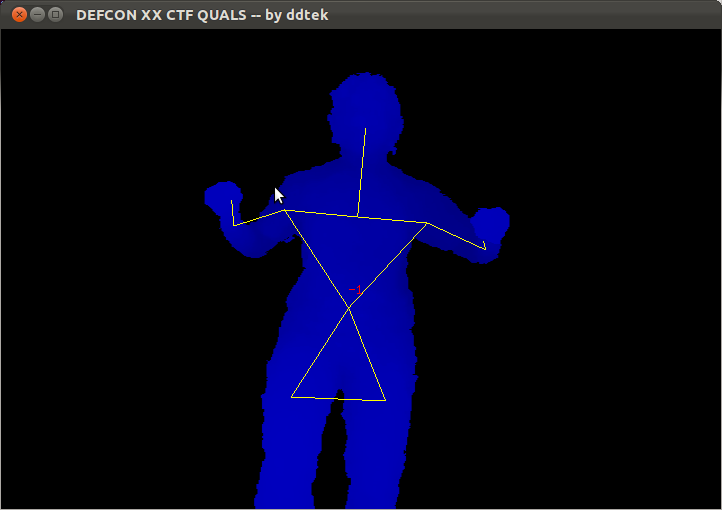

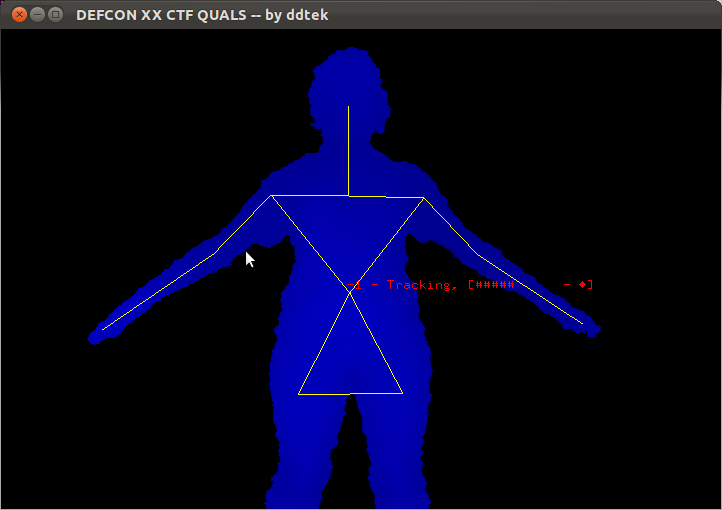

Kinda looks like a TSA pornoscan, no? After holding this silly pose for several seconds, the console said "Calibration complete, start tracking user 1." We then saw something like this:

We thought we needed to make various gestures to unlock the key, so we investigated what other gestures OpenNI recognizes. Unfortunately, the Psi pose is the only one built in. So, we started doing whatever gestures came to mind (including those involving multiple people) and observed the results. Sometimes, we would get a screen like this:

However, there didn't seem to be much rhyme or reason to what was going on. We were also extremely disappointed that ddtek did not include a "sheep" gesture detector. I'll leave the details of that particular gesture to your imagination.

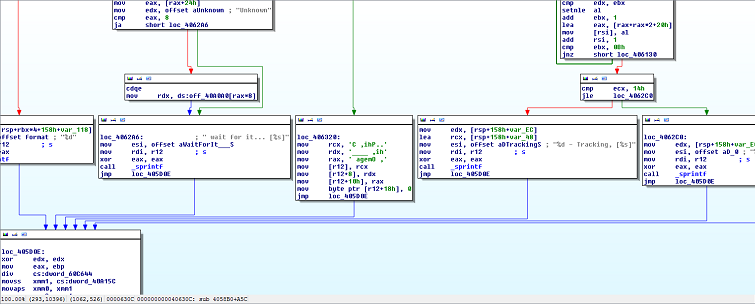

Frustrated and tired of flailing about in front of the Kinect (which we hoped wasn't streamed to ddtek in real time), we decided it was time to open IDA! We looked at xrefs to the "Tracking" string, since that seemed the most random to us. What we found looked rather interesting:

Huh! That looks like the main loop! And over to the right is a branch that will print "you got it, that's all folks!" We began to track back what was necessary to hit this branch. Basically, the word at 0x6164C0 (which I'll call "magicNumber") needed to be greater than 0x1d while tracking a user. But how does magicNumber get incremented? xrefs to magicNumber pointed to one function responsible, located at 0x404D40. I'll call this function "challengeComputation."

Unfortunately, challengeComputation was tricky to reverse engineer. It made a call to a really hairy function, which itself called several other ugly functions a lot. This is when I decided to return to my old friend, Google.

Searching for some of the key strings (e.g. "%d - Tracking") brought up documentation for one of OpenNI's samples. And samples mean source! Unfortunately, the only user tracker sample was in Java. WTF? But with a bit more digging, I found the source to the C++ version. Jackpot! Now all we needed to do was figure out what ddtek changed...

As it turns out, the app is mostly unchanged. They changed some strings (namely, "looking for pose" and "calibrating") in SceneDrawer.cpp:DrawDepthMap, but the real addition was the call to challengeComputation between some of the DrawLimb calls. We were able to tease some more information out of comparing the source to the assembly, such as the fact that challengeComputation would retrieve the XN_SKEL_LEFT_SHOULDER, XN_SKEL_LEFT_HAND, XN_SKEL_RIGHT_SHOULDER, and XN_SKEL_RIGHT_HAND coordinates and pass them to more ugly functions.

At this point, we ran the app under gdb and observed the behavior of various functions. An array at 0x6164E0 (which I called "magic3") would get filled in different ways depending on the result of calls to different functions, which we hypothesized were gesture recognizers. The number of leading 0 words in magic3 is counted by challengeComputation, which compares it with a number stored in an array at 0x60C660 (which I called "wtfArray").

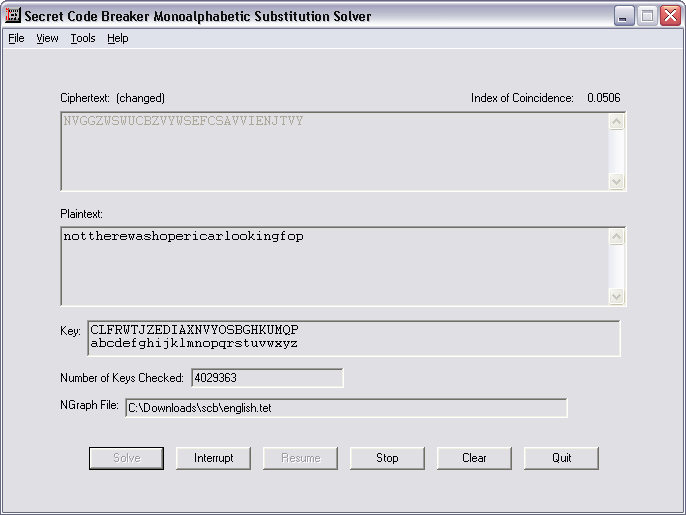

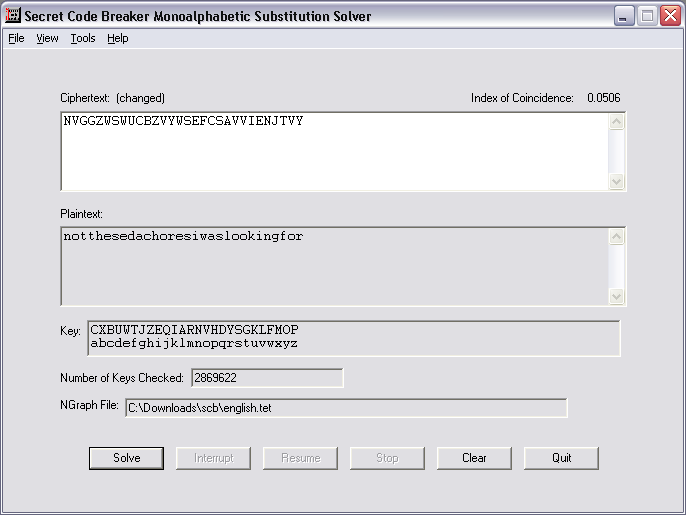
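To make the check concrete, here is a toy Python model of what we believe challengeComputation does with magic3 and wtfArray. It is a sketch under assumptions, not a decompilation: it treats magicNumber as the index of the expected wtfArray entry, it assumes a match bumps magicNumber by one, and it assumes a mismatch simply leaves it alone. All the function names and the demo values are made up for illustration.

```python
WIN_THRESHOLD = 0x1D  # the main loop wants magicNumber to be greater than this


def leading_zero_words(words):
    """Count how many entries at the start of `words` are zero."""
    count = 0
    for word in words:
        if word != 0:
            break
        count += 1
    return count


def challenge_step(magic_number, magic3, wtf_array):
    """One hypothetical pass of the check.

    Assumptions: a match advances magic_number by one, and a mismatch
    leaves it unchanged. The real binary may behave differently.
    """
    if magic_number < len(wtf_array):
        if leading_zero_words(magic3) == wtf_array[magic_number]:
            return magic_number + 1
    return magic_number


def solved(magic_number):
    """Mirror of the branch that prints the success message."""
    return magic_number > WIN_THRESHOLD


# Made-up demo values, NOT the contents of the real arrays.
demo_wtf = [2, 0, 1]
m = 0
m = challenge_step(m, [0, 0, 7, 0], demo_wtf)  # 2 leading zeros, expected 2
m = challenge_step(m, [0, 5], demo_wtf)        # 1 leading zero, expected 0
m = challenge_step(m, [9, 0], demo_wtf)        # 0 leading zeros, expected 0
print(m, solved(m))
```

Since 0x1d is 29, magicNumber has to reach at least 30 before the success branch is taken, which under this model means 30 correct matches in a row.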

I then made a couple of interesting observations. First, magicNumber is used as an index into wtfArray. Second, there can't be more than 26 zeros in magic3. Hmm... Could that mean wtfArray is a list of letters that map to gestures? I did the most obvious thing and mapped the numbers in wtfArray to letters, and got the lovely string "NVGGZWSWUCBZVYWSEFCSAVVIENJTVY." Of course, there's no reason the letters are mapped in a sane way. Finding the right mapping is basically solving a substitution cipher, and luckily there are apps out there that use letter frequency analysis to determine the mapping. Plugging this string into Chris Card's secret code breaker gave something pretty astonishing:

One of the interesting features of this program is that you can force particular letter mappings. I knew the last letter had to be an "r," so I forced Y to map to r. That got us even closer:

At this point, leshi guessed that "sedachores" was actually "semaphores," giving us the key "notthesemaphoresiwaslookingfor".
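As a sanity check, the short Python sketch below derives the letter mapping implied by the ciphertext and the final key, and confirms it is a consistent one-to-one substitution. The two strings come straight from the writeup; the function name is just an illustrative choice, and this is a verification after the fact, not how the code breaker itself works.

```python
from collections import Counter

CIPHERTEXT = "NVGGZWSWUCBZVYWSEFCSAVVIENJTVY"
KEY = "notthesemaphoresiwaslookingfor"


def derive_mapping(ciphertext, plaintext):
    """Build the cipher-letter -> plain-letter table, or raise if the
    pair cannot come from a simple substitution cipher."""
    if len(ciphertext) != len(plaintext):
        raise ValueError("length mismatch")
    mapping = {}
    for c, p in zip(ciphertext, plaintext):
        # setdefault stores p on first sight, then returns the stored value
        if mapping.setdefault(c, p) != p:
            raise ValueError(f"{c} maps to both {mapping[c]} and {p}")
    if len(set(mapping.values())) != len(mapping):
        raise ValueError("two cipher letters share one plaintext letter")
    return mapping


mapping = derive_mapping(CIPHERTEXT, KEY)
decoded = "".join(mapping[c] for c in CIPHERTEXT)
top_letter, top_count = Counter(CIPHERTEXT).most_common(1)[0]

print(decoded)
print(f"most frequent cipher letter: {top_letter} x{top_count} -> {mapping[top_letter]}")
```

The string is 30 letters long, which lines up nicely with magicNumber needing to exceed 0x1d. With only 30 letters, frequency analysis alone can't be expected to nail every letter, which is presumably why the last couple of substitutions took a forced mapping and a human guess.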